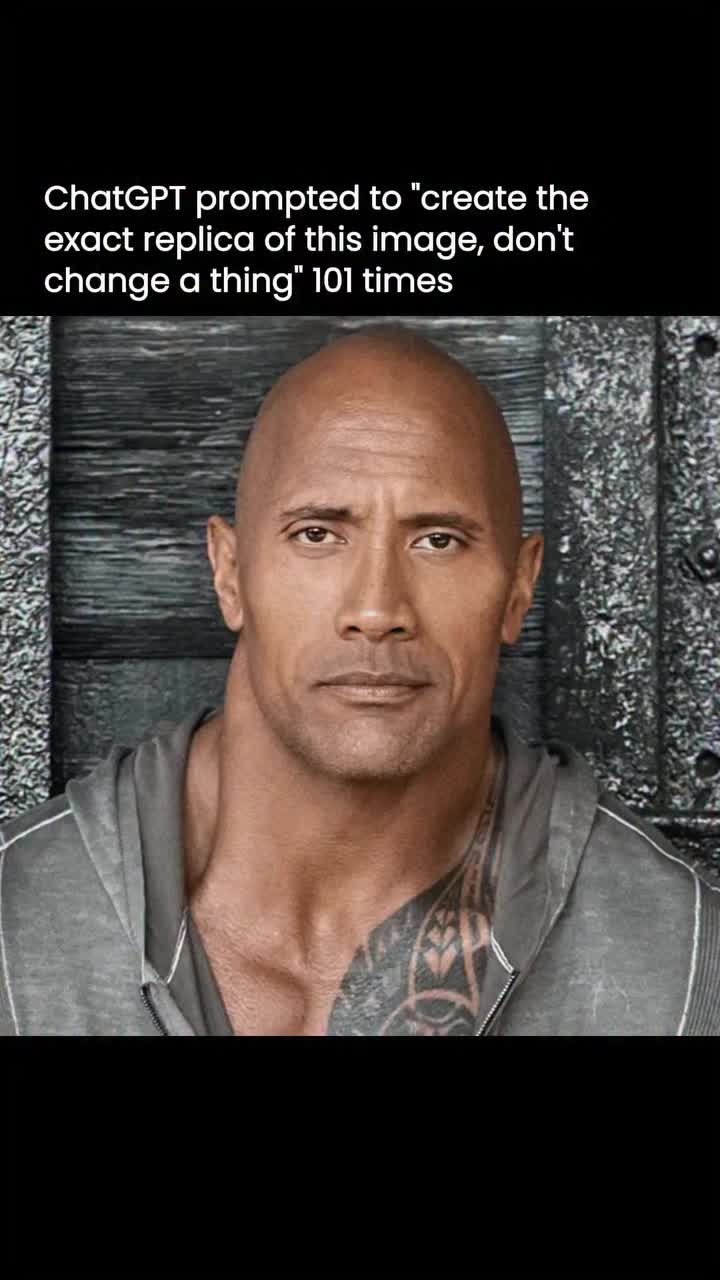

ChatGPT was asked to recreate the same photo perfectly, with zero changes. Then it was asked again. And again. One hundred and one times in a row.

Each generation drifts a little further from the original. Tiny errors in the face, colors, and shapes build up until the final result barely resembles the starting photo. This happens because the AI is not copying pixels. It is interpreting patterns and generating a new image from them. When a reinterpretation is itself reinterpreted over and over, the small errors compound.

A related effect that researchers call model collapse shows what happens when AI models are trained on their own outputs instead of real data: diversity drops, accuracy degrades, and the whole system spirals into distortion.

➡️ Comment “Newsletter” to join thousands of readers getting the best AI news, prompts, and tools for free

🎥: u/Foreign_Builder_2238

#ai #artificialintelligence #chatgpt #technology
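For the curious, here is a toy sketch of both ideas. It is not how ChatGPT works internally. It only assumes that each "copy" adds small random errors, and that a model "trained" on its own samples simply refits a mean and spread to them. All names and numbers (`regenerate`, `copy_chain`, `collapse_chain`, `noise=0.01`, 10 samples per generation) are hypothetical choices for illustration.

```python
import random
import statistics

# Toy "copy the copy" chain: an image is a list of pixel values, and each
# regeneration returns the previous image plus small random errors.
# Hypothetical model: real image generators do not work like this.
def regenerate(image, rng, noise=0.01):
    return [p + rng.gauss(0.0, noise) for p in image]

def drift(original, current):
    # Euclidean distance between the original image and the current copy.
    return sum((a - b) ** 2 for a, b in zip(original, current)) ** 0.5

def copy_chain(n_pixels=1000, generations=101, seed=0):
    rng = random.Random(seed)
    original = [rng.random() for _ in range(n_pixels)]
    image = original
    distances = []
    for _ in range(generations):
        image = regenerate(image, rng)  # each copy is made from the last copy
        distances.append(drift(original, image))
    return distances

# Toy model collapse: each generation "trains" (fits a mean and standard
# deviation) only on samples drawn from the previous generation's model.
def collapse_chain(samples_per_gen=10, generations=100, seed=0):
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # stand-in for the "real data" distribution
    sigmas = [sigma]
    for _ in range(generations):
        data = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)  # spread the next generation learns
        sigmas.append(sigma)
    return sigmas

if __name__ == "__main__":
    d = copy_chain()
    print(f"drift after 1 copy: {d[0]:.3f}, after 101 copies: {d[-1]:.3f}")
    s = collapse_chain()
    print(f"spread: start {s[0]:.3f}, after 100 generations {s[-1]:.3g}")
```

In the first chain the errors never cancel out, so the distance from the original keeps growing (roughly with the square root of the number of copies). In the second, each refit tends to slightly underestimate the spread, and those losses stack up, so the "diversity" of the data shrinks toward zero.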

♬ original sound – VivaLaCareta
